Hello All!

As you can probably tell, I’ve spent a great deal of time this week hacking my Edublogs WordPress CSS. It involved opening up the source code in Firefox’s debugger and figuring out which tags and <DIV>s I could change around. It was surprisingly difficult and time-consuming. Often, simply targeting ids or classes wouldn’t override the built-in CSS, and I needed strange workarounds. I still couldn’t find a way to change the background color in CSS that actually worked; I had to change it in the WordPress dashboard, which lets me change the color of 3 things, the background being one of them. There are still a few issues I’m working through today, mostly unexpected side effects. The z-indices are breaking some of the <a> links (they’re hiding behind a layer that I’m still trying to track down). Hopefully it won’t take too long, because I want to get this finished today. Why? Because I need to work on other things that matter more in the long term, like the 3D environment, which has seen some pretty good progress as well.

Brennan, Alexandra, Thomas and I had a pretty great meeting earlier with Michael Page from Emory, who is already working on a reconstruction of Atlanta in the 1930s on a much larger scale. It will be great to share resources with them, and our project will dovetail nicely because, as it turns out, we’re using the same engine, Unity, to build an environment. We figured out some obstacles and challenges in our discussion that I think can be overcome. 3D modeling is one of them. I’m unfortunately not very good at 3D modeling. I can do it, but it would take me a week to do what would take someone more skilled a few hours.

We also have to think of 3D modeling in terms of available hardware resources. Attempting an exact replica of architecture down to the finest detail can be both time-consuming and unusable. Unusable is a pretty strong word, so what do I mean by that? I mean that if we have a city block filled with high-polygon buildings (in the millions), no one can actually interact with it, arguably even on a powerhouse computer. It could be rendered frame by frame into video, but real-time rendering is problematic. Video game development solves this problem through approximations and tricks, such as textures with bump mapping that emulate 3D detail without actually using more polygons. So what is the point of this caveat? We have to take the extra time to figure out how to best represent the buildings using as few polygons as possible, so that the program is accessible to as many people as possible across varying hardware configurations. This process takes a little more time and involves software like GIMP or Photoshop to make the textures and bump maps.

We have the option of ‘baking’ light maps as well. This is the process of artificially lighting a texture as if a light source were shining on it, without actually using a light source at run time, which would take more hardware resources to process. Light maps are normally best for static environments where light sources don’t change. They are a poor fit for something like real-time sun rendering that changes shadows over time, which I don’t think we’ll need.

I wanted to touch on the ‘science’ behind 3D modeling this week for the blog as well, to share what I’ve been researching and playing around with. I have very little experience in true 3D programming, which differs from 3D modeling in that you procedurally generate a model through code rather than building one in a graphical tool like 3ds Max or Blender. There are three main components to a 3D object: vertices, triangles, and UV points. I think faces could be included, but usually these are figured out through the triangle stripping. So let’s start with vertices:

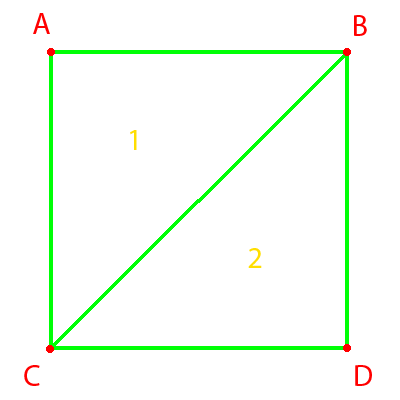

I made this image to help visualize the process going on, so don’t be afraid of it. We have 4 vertices: A, B, C and D. Vertices are pretty straightforward. They can be 2D or 3D. In this example, we’ll make them 2D because it makes explaining things a little easier. We’re working with what’s called a ‘plane’, a flat surface in 3 dimensions. That means, if we remember geometry, that the object has no depth: the X and Y coordinates change from vertex to vertex, but there is no extension into the 3rd dimension, so the Z value stays 0. In theory, we could simply make a 3-vertex triangle, but a square plane helps show the process of triangle stripping. We need 4 vertices, but vertices alone tell us nothing about the object we’re making. How do these vertices connect? It might be easy to assume with 4 vertices, but once you’re dealing with a few thousand, there is an enormous number of ways the vertices could be linked. The next step is telling the model, through triangles, how the vertices connect.
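To make the point about connectivity concrete, here is a minimal Python sketch (not the Unity code used for the project). The coordinates are an assumption: they borrow the 40-unit square used later in this post, with A at the top left, B at the top right, C at the bottom left and D at the bottom right. The helper names `is_flat` and `candidate_triangles` are made up for illustration.

```python
from itertools import combinations

# Corner positions of the square plane as (x, y, z), with z fixed at 0.
# The 40-unit size is an assumption borrowed from later in the post.
VERTICES = {
    "A": (0.0, 40.0, 0.0),   # top left
    "B": (40.0, 40.0, 0.0),  # top right
    "C": (0.0, 0.0, 0.0),    # bottom left
    "D": (40.0, 0.0, 0.0),   # bottom right
}


def is_flat(vertices):
    """Return True when every vertex has z == 0, i.e. the shape is a plane."""
    return all(z == 0.0 for (_x, _y, z) in vertices.values())


def candidate_triangles(vertices):
    """List every unordered set of 3 labels that could form a triangle."""
    return ["".join(combo) for combo in combinations(sorted(vertices), 3)]


print(is_flat(VERTICES))              # True
print(candidate_triangles(VERTICES))  # ['ABC', 'ABD', 'ACD', 'BCD']
```

Even with only 4 vertices there are 4 possible triangles, and nothing in the vertex list says which of them the model should actually use.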


We can only define triangles in 3D programming, not squares. One could define a square as ABDC, but in programming we use the smallest polygon available: a triangle. So how do we represent a square with triangles? With two right isosceles triangles. Let’s look at T1 (triangle 1). We could label it as ABC, BCA, CAB, CBA, BAC, or ACB. How are we going to choose the order of the vertices? It actually makes a big difference: the ‘face’ of a polygon is determined by a clockwise order of vertices (that’s the convention in Unity, at least; some other tools use counter-clockwise). To illustrate: if I look at this plane head-on, like in the picture, two things can happen. Either I see the front of the plane (a face) or I see the back of the plane (which isn’t visible). Faces are the visible sides of triangles. The order of the vertices is how the model determines which side is the front and which is the back. If I ordered the vertices as CBA, BAC, or ACB and stared at this square, I couldn’t see it. It would exist, but the face would point away from me, and I would have to rotate the object in 3D space to see the ‘back’ of it, which I had made visible instead of the front. By using a clockwise ordering of the triangle, I’m telling the model: “This is the front face of the triangle.” So we could order the vertices as ABC, BCA, or CAB. That’s 3 ways. Which one should we use?
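One way to check the clockwise rule by hand is the sign of a triangle’s signed area. The following is a small Python sketch of that check, assuming the layout from the image (A top left, B top right, C bottom left), a 40-unit square, and a Y axis that points up. The function names are hypothetical.

```python
from itertools import permutations

# 2D positions of the three corners of T1 (assumed 40-unit square, Y up).
POINTS = {"A": (0, 40), "B": (40, 40), "C": (0, 0)}


def signed_area(p, q, r):
    """Twice the signed area of triangle p-q-r.

    With the Y axis pointing up, a negative value means the three
    points are listed in clockwise order.
    """
    return (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])


def is_clockwise(order, points=POINTS):
    """Return True if the labels in `order` go around clockwise."""
    p, q, r = (points[label] for label in order)
    return signed_area(p, q, r) < 0


def front_facing_orders(labels="ABC"):
    """All orderings of the labels that follow the clockwise rule."""
    return sorted("".join(o) for o in permutations(labels) if is_clockwise(o))


print(front_facing_orders())  # ['ABC', 'BCA', 'CAB']
```

The three clockwise orderings are just rotations of each other, which is why all of them describe the same front face.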

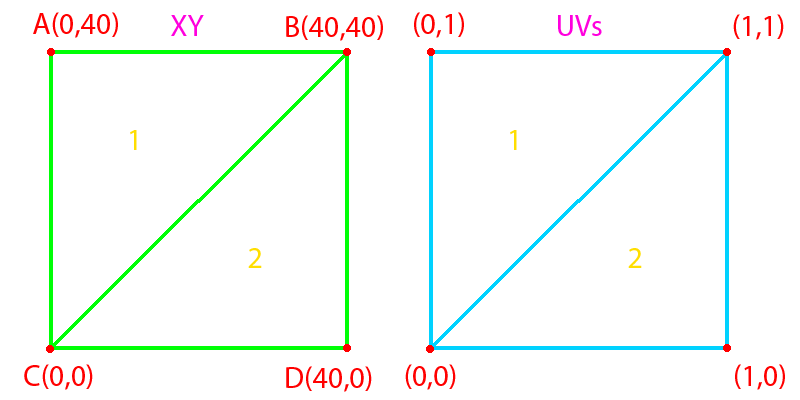

This gets us into triangle stripping: triangles attached by joined sides. We obviously want to join along the shared BC side, because we can’t join the triangles any other way and the model wouldn’t work right (I believe so, anyway; I’m no expert, but I’m under the impression the triangles have to be joined along a shared side). So we now know we have 2 triangles that can be ABC, BCA, or CAB for T1, and BDC, CBD, or DCB for T2. How do we link these two triangles? What if we use CAB and CBD? Does it work? Yes, because CAB and CBD share the same 2 vertices (C and B) and both follow a clockwise ordering. When the model reads these two triangles, each containing 3 vertices, it will strip them together into the shape of a square plane.

So this is a pretty basic, hopefully not too complicated way of building a 2D plane. How do we texture it now? That’s what UV coordinates are for (not ultraviolet =P). They use the same vertices but tell the model how to apply a texture to the triangle faces. Let’s say this square at full scale represents 40 pixels on a screen. We could make a texture that is 40px x 40px and apply it to the mesh (the model), but the mesh won’t have any way of knowing how to lay that texture out unless we tell it.
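Here is a rough Python sketch of how the two triangles CAB and CBD can be written as indices into a vertex array. It assumes the ABDC storage order described later in this post (A = 0, B = 1, D = 2, C = 3) and a 40-unit square. The flat list of indices is similar in spirit to what engines like Unity expect, but the helper names here are made up for illustration.

```python
# Vertex array in ABDC order: A=0, B=1, D=2, C=3 (assumed 40-unit square).
LABELS = "ABDC"
VERTICES = [(0, 40), (40, 40), (40, 0), (0, 0)]


def to_indices(order):
    """Convert a label string such as 'CAB' into vertex-array indices."""
    return [LABELS.index(label) for label in order]


def split_triangles(flat):
    """Group a flat index list into tuples of 3 indices, one per triangle."""
    return [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]


def shared_edge(t1, t2):
    """Labels of the vertices two triangles have in common."""
    return sorted(LABELS[i] for i in set(t1) & set(t2))


def is_clockwise(tri):
    """True if the triangle's vertices go clockwise (Y axis pointing up)."""
    (px, py), (qx, qy), (rx, ry) = (VERTICES[i] for i in tri)
    return (qx - px) * (ry - py) - (qy - py) * (rx - px) < 0


# T1 = CAB followed by T2 = CBD, stored as one flat list of indices.
TRIANGLES = to_indices("CAB") + to_indices("CBD")
t1, t2 = split_triangles(TRIANGLES)

print(TRIANGLES)                           # [3, 0, 1, 3, 1, 2]
print(shared_edge(t1, t2))                 # ['B', 'C']
print(is_clockwise(t1), is_clockwise(t2))  # True True
```

Both triangles reuse indices 3 and 1, so the shared side BC is stored once in the vertex array and referenced twice.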

Surprise: UV coordinates also need to follow a clockwise motion. So how would we define the full 40px x 40px texture on our plane? We use the point ordering ABDC. Why start with A? It depends on how we program the vertices into an array. In this case, I listed the vertices in the order ABDC when making the plane, so the UV coordinates need to match that order. This can seem confusing because the triangles aren’t using all 4 vertices (they only use 3 each), so where do I get this order from? In code, I first fill an array (a sequential list) with my vertices, and I chose to store them in ABDC order. When I define triangles, I’m referring to the vertices I already defined in that array. UV coordinates use the same vertices and follow the same order in the array. If you feel lost on that, don’t worry, and welcome to my world. I’ve been racking my brain for a few weeks now trying to apply this information to 3 dimensions and feel like I’m losing my mind. I’m nearly there though.

So the last thing about UV coordinates: how does the mesh know to use the outer 4 corners of the texture? It doesn’t. You also have to define that (nothing is easy in this process lol). A UV coordinate set uses a percentage to define the area of the image. If we have a square that is 40 x 40 units, our coordinates for ABDC would be (0,40), (40,40), (40,0), (0,0). These won’t work as UV coordinates, since UVs are based on a proportion of the image (this will make more sense in a second). Remember when I said I added ABDC to a sequential array (list)? That means A = 0, B = 1, D = 2, C = 3. We start counting at 0 in programming rather than 1, which can be confusing, but in our array these 4 points will always correspond to this exact order. UV coordinates rely on matching this same order but use a ratio. If I wanted to use the whole texture, I would define 4 UV points: (0,1), (1,1), (1,0), (0,0). If you think of 1 as 100% and 0 as 0%, with the point of origin at the bottom left corner (in Unity, the origin of a UV texture is the bottom left corner), we might be able to see what’s going on here.
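The step from unit coordinates to proportions is just a division by the size of the plane. Here is a short Python sketch of that idea, assuming the plane’s bottom left corner (C) sits at the origin so no offset is needed. The function name `positions_to_uvs` is hypothetical.

```python
# Plane corners in the array order A, B, D, C (index 0, 1, 2, 3).
POSITIONS = [(0, 40), (40, 40), (40, 0), (0, 0)]
SIZE = 40  # side length of the square, in the same units as POSITIONS


def positions_to_uvs(positions, width, height):
    """Turn absolute corner positions into 0..1 texture proportions.

    Assumes the bottom left corner of the plane is at (0, 0).
    """
    return [(x / width, y / height) for (x, y) in positions]


uvs = positions_to_uvs(POSITIONS, SIZE, SIZE)
for label, uv in zip("ABDC", uvs):
    print(label, uv)
# A (0.0, 1.0)
# B (1.0, 1.0)
# D (1.0, 0.0)
# C (0.0, 0.0)
```

Because the list comprehension keeps the input order, the first UV pair always belongs to the first vertex in the array, the second to the second, and so on.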

Basically, the orders have to match because the first point in the UV list has to correspond to the first point in the XY coordinate list, and likewise for every other pair of points. The logic goes like this: for the 1st point in the XY list (A), pin the top left of the image (0% right and 100% up) to that coordinate. For the 2nd point (B), pin the top right of the image (100% right and 100% up) to that coordinate. For the 3rd point (D), pin the bottom right (100% right and 0% up) to that coordinate, and for the 4th point (C), pin the bottom left corner of the image (0% right and 0% up) to that coordinate. Using percentages for UVs lets us customize things a bit. For example, if I wanted to use only the bottom left corner of my image, I could set my 1s to 0.5s (50%) and it would stretch the bottom left quadrant of my texture across the entire plane.
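As a quick illustration of the 0.5 trick, this Python sketch shrinks the full-texture UVs and converts them back to pixel positions on a 40px x 40px texture. The pixel positions are measured from the bottom left corner, and both function names are made up for this example.

```python
# Full-texture UVs in the array order A, B, D, C.
FULL_UVS = [(0.0, 1.0), (1.0, 1.0), (1.0, 0.0), (0.0, 0.0)]


def scale_uvs(uvs, fraction):
    """Shrink the sampled area toward the bottom left corner of the image."""
    return [(u * fraction, v * fraction) for (u, v) in uvs]


def uv_to_pixel(uv, width, height):
    """Convert a UV proportion to a pixel position from the bottom left."""
    u, v = uv
    return (u * width, v * height)


quadrant = scale_uvs(FULL_UVS, 0.5)
pixels = [uv_to_pixel(uv, 40, 40) for uv in quadrant]

print(quadrant)  # [(0.0, 0.5), (0.5, 0.5), (0.5, 0.0), (0.0, 0.0)]
print(pixels)    # [(0.0, 20.0), (20.0, 20.0), (20.0, 0.0), (0.0, 0.0)]
```

The plane’s corners stay where they are; only the part of the image pinned to them changes, so the 20px x 20px quadrant gets stretched over the full square.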


So that’s my knowledge of 3D programming in a nutshell at the moment. It’s limited, but I think the time I’ve invested in going deeper into this over the past 2 weeks deserves some blogging, to tell everyone about the process we’re using for our 3D environment of Decatur St. Granted, we will be using 3D modeling software more than this kind of coding, but having a deeper grasp of the math and code behind 3D models lets me better understand and customize the environment for our project. If you have any questions about this topic, feel free to ask! Sorry for such a long post, but I hope it’s interesting and explained well enough for others to understand. It definitely took me a while at first.

Cheers,

Robert